5-minute Paper Review: Evolutionary Stochastic Gradient Descent | by Enoch Kan | Towards Data Science

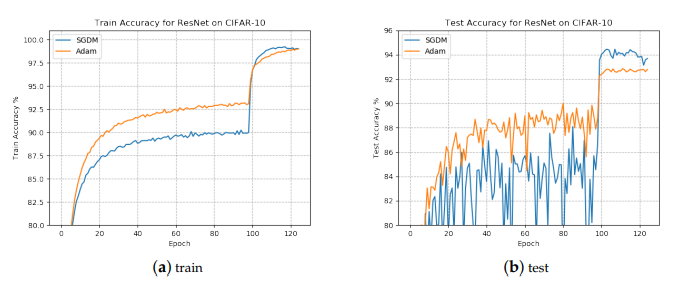

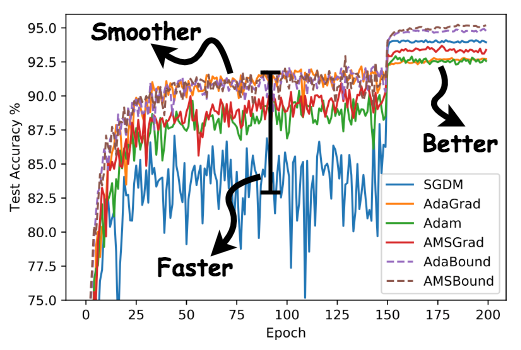

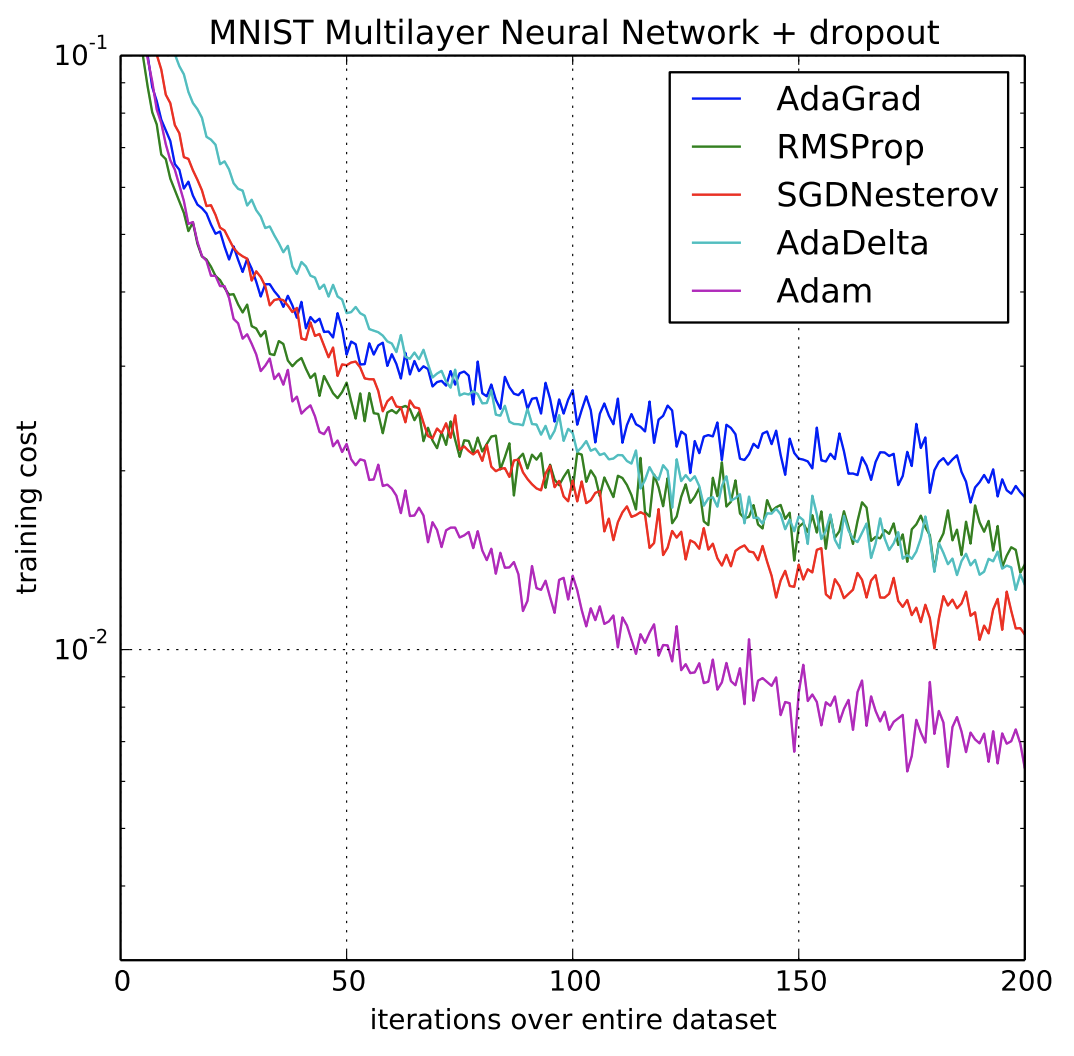

The effect of choosing optimizer algorithms to improve computer vision tasks: a comparative study | Multimedia Tools and Applications

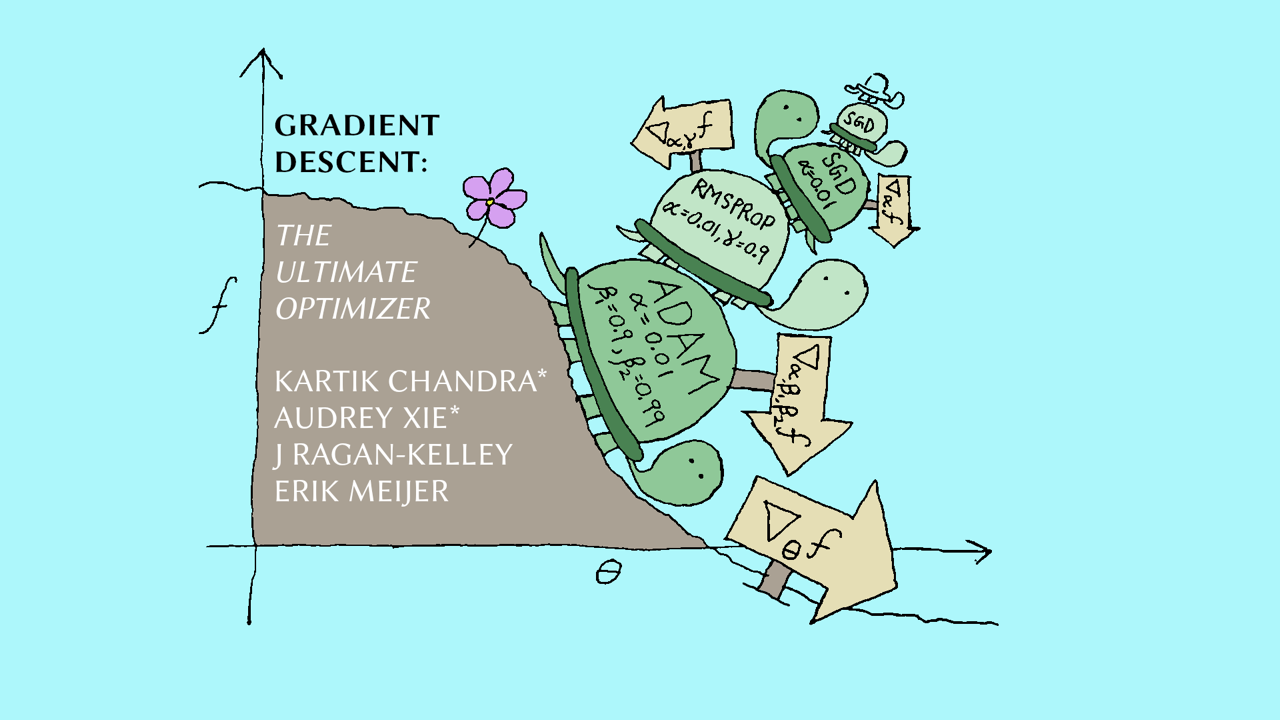

NeurIPS 2022 | MIT & Meta Enable Gradient Descent Optimizers to Automatically Tune Their Own Hyperparameters | Synced

![\[R\]\[D\] Hey LOMO paper authors, Does SGD have optimizer states, or does it not? : r/MachineLearning](https://preview.redd.it/r-d-hey-lomo-paper-authors-does-sgd-have-optimizer-states-v0-b0dj2nzscumb1.png?width=1055&format=png&auto=webp&s=d25d6584bc419464374060db783f9081b4e44388)

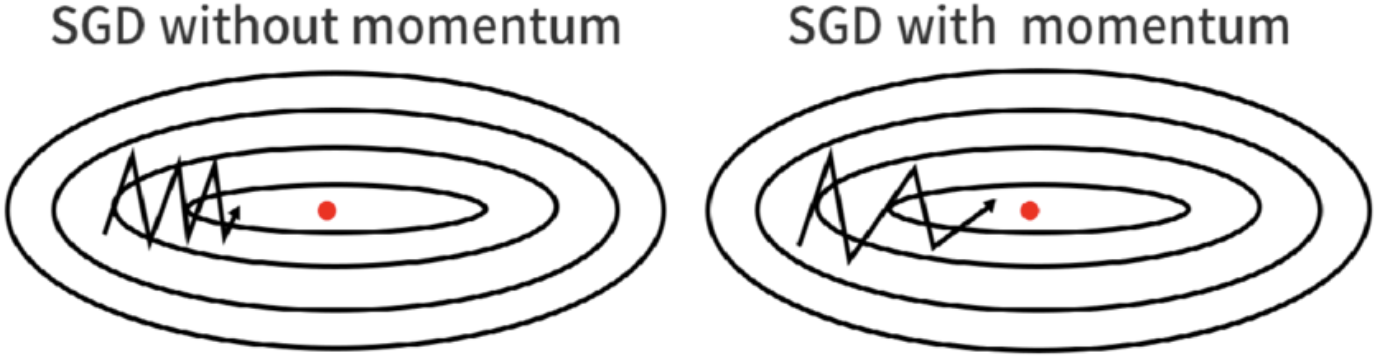

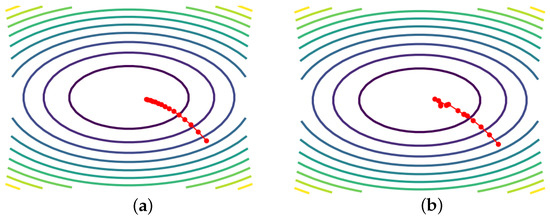

![\[PDF\] SGD momentum optimizer with step estimation by online parabola model | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/efde62b2601b65b77321a81a8acfeef2283e624d/1-Figure1-1.png)

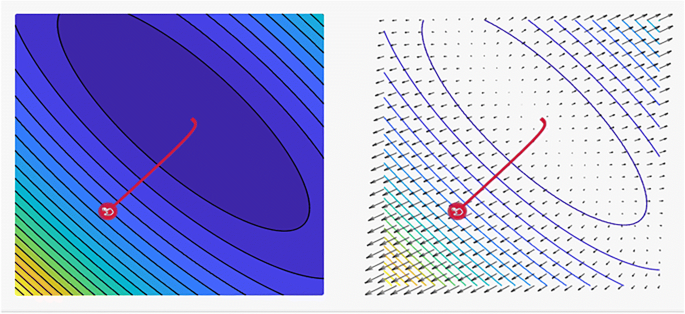

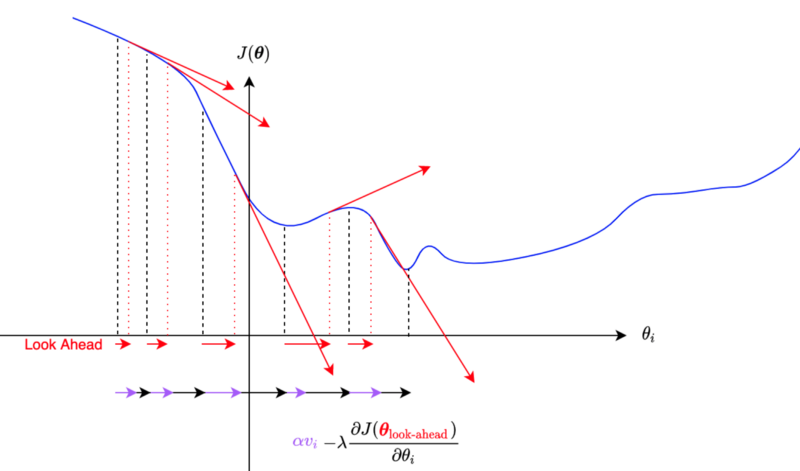

![\[PDF\] Lookahead Optimizer: k steps forward, 1 step back | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/600be3dde18d1059c6b56170bd04ee65ce79a848/2-Figure1-1.png)